The Human Side of AI: Lessons from a CareOps Chatbot

Date

Aug 29, 2024

Category

CareOps

Author

Rik Renard

Making data-informed decisions is the heart of exceptional care. Or, at least, it should be. But anyone who's worked with healthcare data knows that accessing and interpreting it is far from a walk in the park - it's more like navigating a labyrinth, blindfolded.

Information is scattered across EHRs, spreadsheets, handwritten notes - you name it. And even when it’s all in one place, data alone means nothing without the ability to analyze it for meaningful insights.

Adding to the challenge, most providers don’t have dedicated data teams, and even when they do, those teams are swamped. A simple data question can take days, even weeks, to answer - only to lead to more follow-up questions and more waiting.

That's where Shelly 🐢, our CareOps AI Assistant, comes in. We developed Shelly to democratize healthcare data through Conversational Analytics. This AI-powered companion provides data answers and insights through natural, intuitive conversations, making data insights accessible to everyone on the care team.

Building Shelly was a true journey - full of wins, failures, and facepalm moments. But most importantly, it led to invaluable learnings that we're eager to share.

Our hope? To save you a few headaches (and perhaps a tear or two) on your path to developing AI in healthcare.

Curious about the 8 key lessons we learned while building Shelly? Keep reading.

Spoiler alert - it often looked something like this:

Lesson 1: Treat A Multi-Agent System Like a High-Performing Team

As we began mapping out the complexities within our data, defining requirements, and outlining Shelly's tasks, it quickly became clear that this wasn’t just about building a simple text-to-SQL tool. We needed a whole team - a multi-agent system.

Each agent needed a clear role and responsibility, specializing in its area.

What we learned is that expertise and defined roles weren’t enough. Even more critical were a shared understanding of the goal and how well the agents collaborated. Thinking about it, it’s remarkably similar to the art of building a high-performing “human” team - real magic happens when everyone knows their role and works together seamlessly.

Thinking about it even further, I am incredibly eager to see how the most efficient human + AI agent teams will be structured. But I digress - back to building agentic systems.

Here's how we structured our "team":

Planner: The team captain, managing the flow of tasks between agents and deciding the best way to present the final answer - text, visualization, or table. The Planner also ensures smooth operation and serves as the system's main guardrail.

Entity Extractor: The detail-oriented specialist, extracting key terms from user queries and matching them with existing data to eliminate ambiguity.

SQL Agent: The powerhouse, turning natural language questions into complex BigQuery queries, handling errors gracefully, and delivering data answers.

Visualization Agent: The designer, converting raw data into insightful visualizations ready for display.

Table Agent: The organizer, structuring data into tables and ensuring it's formatted correctly for display.
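To make the division of labor concrete, here is a minimal sketch of how a Planner might route work between the other agents. It is not Shelly's actual code: every function name, the `KNOWN_TERMS` mapping, the keyword-based routing, and the canned rows are assumptions for illustration, and the real agents would call an LLM and BigQuery rather than return stubbed data.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    kind: str        # "text", "visualization", or "table"
    payload: object


# Hypothetical mapping from business terms to data columns.
KNOWN_TERMS = {"active patients": "patient_id"}


def extract_entities(question: str, known_terms: dict[str, str]) -> dict[str, str]:
    """Entity Extractor stand-in: keep the known terms found in the question."""
    lowered = question.lower()
    return {term: col for term, col in known_terms.items() if term in lowered}


def sql_agent(question: str, entities: dict[str, str]) -> list[dict]:
    """SQL Agent stand-in: returns canned rows instead of querying a warehouse."""
    return [{"month": "2024-06", "value": 120}, {"month": "2024-07", "value": 135}]


def table_agent(rows: list[dict]) -> list[list]:
    """Table Agent stand-in: header row followed by data rows."""
    header = list(rows[0].keys())
    return [header] + [[row[h] for h in header] for row in rows]


def visualization_agent(rows: list[dict]) -> dict:
    """Visualization Agent stand-in: a simple line-chart spec."""
    return {"type": "line",
            "x": [r["month"] for r in rows],
            "y": [r["value"] for r in rows]}


def planner(question: str, known_terms: dict[str, str]) -> Answer:
    """Planner stand-in: guardrail first, then pick the output format."""
    entities = extract_entities(question, known_terms)
    if not entities:
        # Guardrail: explain what went wrong instead of guessing.
        return Answer("text", "I could not match that to known data. "
                              "Try naming a metric, e.g. 'active patients'.")
    rows = sql_agent(question, entities)
    lowered = question.lower()
    if any(word in lowered for word in ("trend", "chart", "plot")):
        return Answer("visualization", visualization_agent(rows))
    if "table" in lowered:
        return Answer("table", table_agent(rows))
    return Answer("text", f"Latest value: {rows[-1]['value']}")
```

Calling `planner("Show me the trend of active patients", KNOWN_TERMS)` returns a visualization answer, while a question that mentions no known term gets a guiding text reply. In a real system the format decision would come from the Planner's LLM rather than keyword matching.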

Lesson 2: Embrace Going Big, Then Scaling Down

To make these agents work together, we followed Andrej Karpathy's advice for LLMs: "go big, then scale down". Start with the most powerful tools, prove the concept, then optimize.

For me, this approach is always a battle against old habits. You know the drill - “start with linear or logistic regression as a baseline and then scale up”, anyone? No??? Okay, moving on.

This time, we embraced the challenge and went all in. We chose the best-performing models (mostly GPT-4 at the time) and implemented ReAct agents across the board, leveraging function calling, few-shot examples, and RAG where needed to guide their reasoning.

After only a few long nights (and a bit of crying out loud, and considering a career change), we got the system working - better than my pessimistic self had expected.

Here’s the key takeaway we re-learned: when time is tight, focus on proving your approach works. Once it does, you can scale down and optimize. Sometimes, going big from the start isn’t just bold - it’s the smartest move you can make.
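For readers who haven't met the ReAct pattern mentioned above, here is a rough sketch of the loop: the model alternates between a thought, a tool call, and an observation until it produces a final answer. Everything in it is an assumption for illustration. `scripted_model` is a stand-in for a real LLM call, `run_sql` returns canned data, and the table name in the few-shot example is made up.

```python
import json
from typing import Callable


def run_sql(query: str) -> str:
    """Hypothetical tool: returns canned JSON instead of hitting a database."""
    return json.dumps([{"active_patients": 135}])


TOOLS: dict[str, Callable[[str], str]] = {"run_sql": run_sql}

# One few-shot example showing the Thought / Action / Observation format.
FEW_SHOT = (
    "Question: How many care flows started last week?\n"
    "Thought: I need to count rows in the care flow table.\n"
    "Action: run_sql[SELECT COUNT(*) FROM care_flows]\n"
    "Observation: [{\"count\": 42}]\n"
    "Thought: I have the number.\n"
    "Final: 42 care flows started last week.\n"
)


def scripted_model(prompt: str) -> dict:
    """Stand-in for an LLM: call the tool once, then answer from its output."""
    live = prompt.rsplit("Question:", 1)[-1]   # ignore the few-shot example
    if "Observation:" not in live:
        return {"thought": "I need to query the data.",
                "action": "run_sql",
                "input": "SELECT COUNT(DISTINCT patient_id) FROM activity"}
    observation = live.rsplit("Observation: ", 1)[-1]
    count = json.loads(observation)[0]["active_patients"]
    return {"thought": "I have the number.",
            "final": f"There were {count} monthly active patients."}


def react(question: str, model: Callable[[str], dict],
          tools: dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Run the reason-act-observe loop until the model gives a final answer."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(FEW_SHOT + "\n".join(transcript))
        transcript.append(f"Thought: {step['thought']}")
        if "final" in step:
            return step["final"]
        tool = tools.get(step["action"])
        observation = (tool(step["input"]) if tool
                       else f"Unknown tool: {step['action']}")
        transcript.append(f"Action: {step['action']}[{step['input']}]")
        transcript.append(f"Observation: {observation}")
    return "I could not finish within the step limit."
```

The `max_steps` cap is a deliberate choice: without it, an agent that keeps calling the wrong tool would loop (and burn tokens) forever.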

Lesson 3: Embrace the Future, One AI Leap at a Time

Getting Shelly to work was a huge win for us. But then came the hard part: making her fast and efficient. We set ambitious goals: 3x faster, 5x cheaper. Easier said than done.

We experimented with different LLMs, streamlined agent processes, and became obsessed with minimizing those pesky tokens.

Despite our best efforts, we barely hit our speed target and cut costs only 3x, short of our 5x goal.

And then, a miracle: OpenAI released GPT-4o. Incorporating it into our experiments sped things up by 80%, cut costs by 5x, and improved performance - overnight, literally.

This experience brought to mind something Sam Altman often says (I’m paraphrasing):

“Do not build your AI systems with current constraints; build for future capabilities.”

I might not agree with the man on everything, but after this easy win, I’m sold on this philosophy.

The main takeaway (note to my pessimistic self): In AI, what seems impossible today might be a reality tomorrow. So keep building, keep experimenting, and never underestimate the power of a breakthrough - it might just deliver exactly what you need.

Lesson 4: Don't Underestimate the Power of a Well-Placed Comma (aka Prompt Engineering)

Something we’ve repeatedly re-learned while working on Shelly - good prompting can take you incredibly far, even in agentic and multi-agent systems.

There’s really only one key lesson worth pointing out here, and I’ll let a better messenger deliver it:

Lesson 5: Don't Let Your AI Get Lost in Translation

LLMs are smart, but they don’t magically acquire years of specialized knowledge or understand your unique business language. You have to “teach” them the nuances with clear definitions and examples.

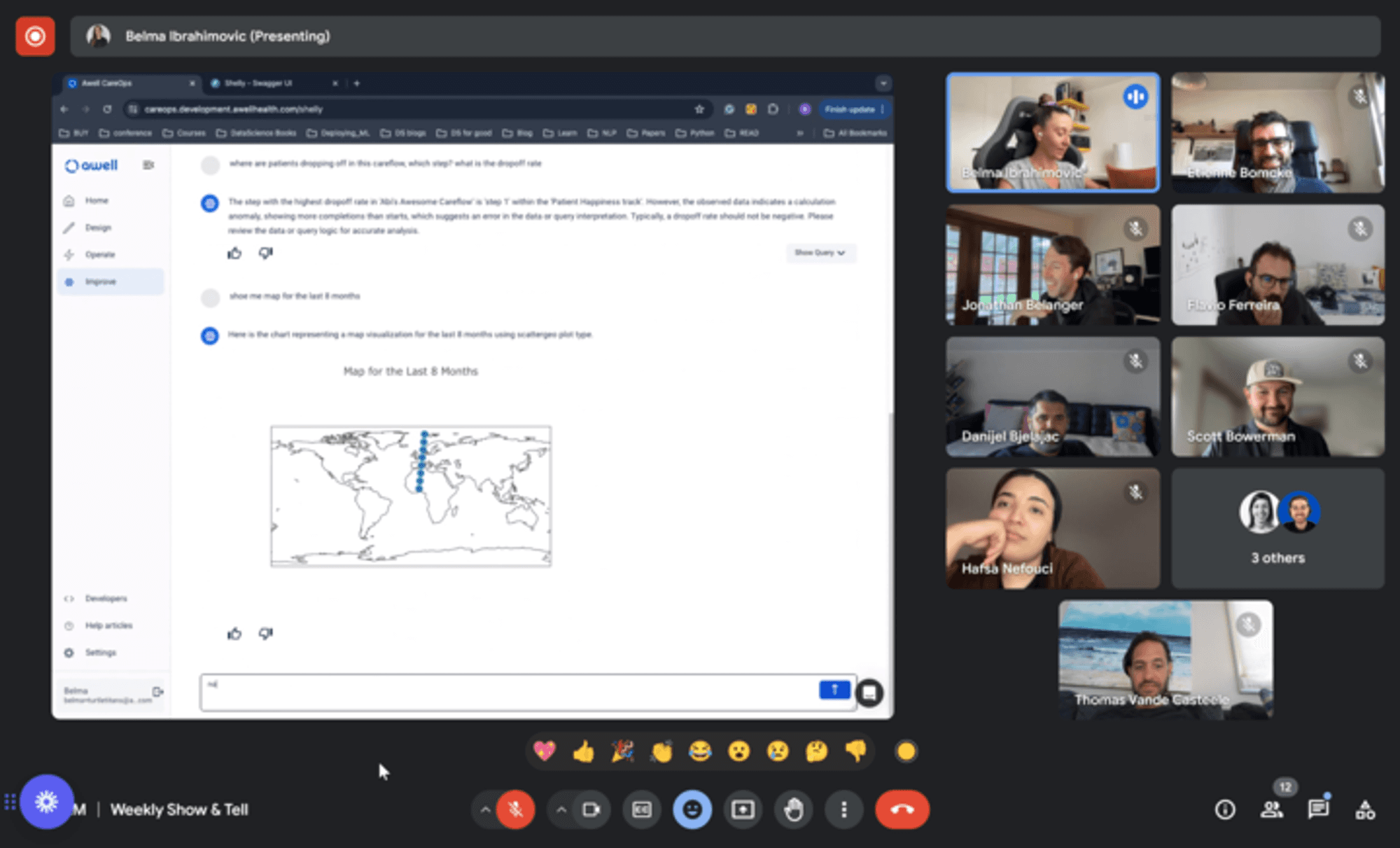

I thought I had this covered - until one of our internal live demos, when Shelly was asked to provide a MAP, which in Awell-speak stands for Monthly Active Patients.

Shelly, bless her artificial heart, completely missed the context and generated an actual map. 🤦♀️

So, triple-check your definitions, test rigorously, and remember that domain knowledge is crucial - lesson learned!
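One simple way to "teach" a model your business language is to keep a glossary and inject the relevant definitions into the prompt. The sketch below shows the idea and is not how Shelly actually does it: the `GLOSSARY` contents beyond MAP, the function names, and the prompt wording are all assumptions.

```python
import re

# Hypothetical glossary. Only the MAP expansion comes from the story above.
GLOSSARY = {
    "MAP": "Monthly Active Patients (a metric, not a geographic map)",
    "LOS": "Length of stay (illustrative entry)",
}


def find_terms(question: str, glossary: dict[str, str]) -> list[str]:
    """Return glossary terms that appear as whole, case-sensitive words."""
    return [term for term in glossary
            if re.search(rf"\b{re.escape(term)}\b", question)]


def build_prompt(question: str, glossary: dict[str, str]) -> str:
    """Prepend definitions for any glossary terms used in the question."""
    lines = ["You answer analytics questions about care operations."]
    terms = find_terms(question, glossary)
    if terms:
        lines.append("Domain definitions:")
        lines += [f"- {term}: {glossary[term]}" for term in terms]
    lines.append(f"Question: {question}")
    return "\n".join(lines)
```

With this in place, "What was our MAP in July?" carries the definition along, while "Draw a map of our clinics" does not trigger it because the match is case-sensitive. That choice is itself a trade-off: a user typing "map" in lowercase would still need the model (or a clarifying question) to sort out the ambiguity.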

Lesson 6: Early Customer Involvement is Non-negotiable

We built Shelly with care, following the best UX practices - equipping her with clear explanations, helpful tips, and even a touch of humor. We also let users peek behind the curtain at her SQL queries.

And then, we introduced her to the real world. And it was… an experience.

It was beyond painful to watch Shelly fail spectacularly at times and frustrate our beta testers, but those moments were pure gold for improvement. Our users quickly showed us what we couldn’t see ourselves - highlighting areas where Shelly needed more clarity, better responses, and, yes, even a bit more charm.

We re-learned a valuable lesson: You can't build a truly useful AI system in a vacuum. You need real users, with their messy, unpredictable questions and workflows, to reveal the gaps between theory and practice.

Huge thanks to our amazing customers who went on the early Shelly 🐢 journey with us!

Lesson 7: There is Such a Thing as Over-Guardrailing

Guardrails are essential to keep your AI system from going off the rails (pun intended!), but there's a delicate balance between responsible guardrailing and turning your AI into a strict, joyless rule enforcer.

Initially, I was so focused on preventing Shelly from answering unwanted questions that we went too far.

The result? Watching our beta testers’ faces turn red with frustration as Shelly repeatedly served up:

"I am sorry, I cannot help you with that."

Again. And again. And… well, you get the picture.

We learned the hard way that guardrails need to be more than just a bunch of "don'ts." Instead of shutting down conversations, they should guide the user, offering explanations and alternative paths rather than dead ends.
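As a rough illustration of a guardrail that guides instead of blocking, the sketch below returns an explanation and a few example questions whenever it declines. The topic list, blocked keywords, and messages are all hypothetical, and a production guardrail would likely rely on an LLM classifier rather than keyword matching.

```python
from dataclasses import dataclass, field


@dataclass
class GuardrailResult:
    allowed: bool
    message: str = ""
    suggestions: list[str] = field(default_factory=list)


# Hypothetical supported topics, each paired with an example question.
SUPPORTED_TOPICS = {
    "patients": "How many patients were active last month?",
    "care flows": "Which care flows had the most enrollments?",
}

# Hypothetical keywords for requests the assistant should not answer.
BLOCKED_KEYWORDS = {"diagnose", "prescribe"}


def check(question: str) -> GuardrailResult:
    """Decline with an explanation and alternatives, never a bare refusal."""
    lowered = question.lower()
    examples = list(SUPPORTED_TOPICS.values())
    if any(word in lowered for word in BLOCKED_KEYWORDS):
        return GuardrailResult(
            False,
            "I can't give clinical advice, but I can answer questions "
            "about your care operations data. For example:",
            examples,
        )
    if not any(topic in lowered for topic in SUPPORTED_TOPICS):
        return GuardrailResult(
            False,
            "I couldn't connect that to the data I have access to. "
            "Here are some questions I can answer:",
            examples,
        )
    return GuardrailResult(True)
```

The point of the `suggestions` field is that every "no" comes with a next step, so the user is redirected rather than stopped.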

Lesson 8: Don't Forget to Make Your AI Smile

As a thank you for sticking with me this far, I want to leave you with one personal learning - how the story of a chatbot fundamentally changed my perspective on developing AI for healthcare.

At the start, I was laser-focused on the technicalities - ensuring Shelly had enough guardrails and that every response was professional and efficient.

But then came the beta-testing phase. As real-world feedback poured in, I became obsessed with fine-tuning every technical detail to address the issues we uncovered. My focus remained entirely on the mechanics.

Then Thomas, our founder and CEO, said something that completely shifted my approach:

"Let's make Shelly fun as well - healthcare needs more humor."

And it hit me - technology like this can be so much more than just a tool. It can transform lives, add a touch of brightness to even the most challenging situations, and become an integral part of our world.

Yes, we need AI that's powerful, precise and safe. But we also need AI that’s engaging, approachable, and dare I say it, a little bit fun. AI that truly enhances our lives, not just automates them.

The future of healthcare with AI isn’t just about better tools; it’s about creating a system where humans and technology collaborate to deliver care that’s more efficient, effective, and patient-centered. A system that preserves the human touch and makes everything easier, even when it’s hard.

And that’s a future I’m incredibly excited to help shape.

🎬 Wrap Up

That has been quite a journey. Even with everything we've covered, I’ve only just begun to scratch the surface of what went into creating Shelly and the humble lessons we’ve learned along the way.

If you’re curious about the technical details or have any questions, don’t hesitate to reach out.

But this isn’t just about Shelly - it’s about the future of healthcare with AI. A future filled with endless possibilities and important questions.

What excites you about this future? What scares you? I would love to hear your thoughts.

Let's continue the conversation and shape the future of healthcare together, one lesson and insight at a time.
